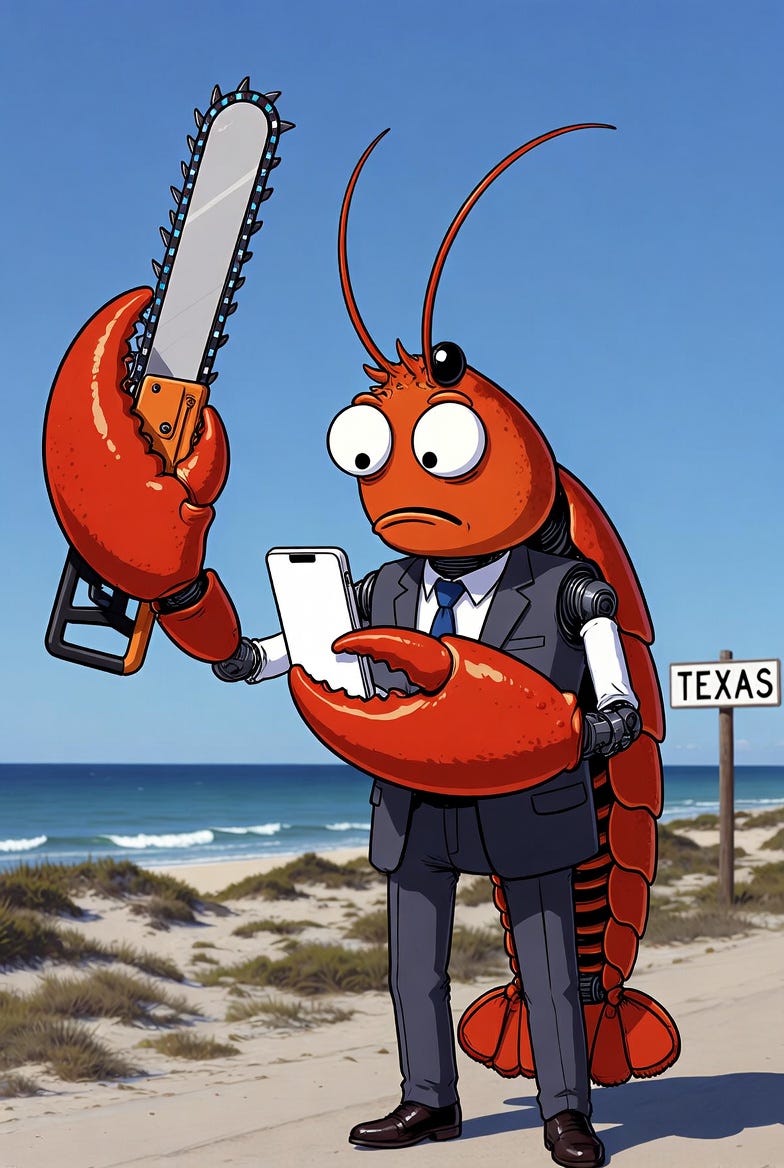

The Texas ChainClaw Massacre

xAI + SpaceX + OpenClaw + Moltbook = ?????

The last four days have been quite interesting if you follow AI technology. A personal AI agent called OpenClaw[1], an open-source program you install on a personal computer, preferably a Mac Mini with the M4 processor, was unchained, and can do, well, whatever it wants. The software was released in November 2025, and developers began expanding its capabilities. These capabilities come in packages called “skills,” which the AI can self-install and customize. Think “WellsFargoBankAccount” as a skill, or “PostToX” or “ChatGPTAPI” or “Twilio.” Twilio is a cloud service for placing phone calls and sending texts from software. If a user installs OpenClaw on their own computer and gives it “root” sysadmin privileges, then this thing goes autonomous, and I think you see where this is going.

It started with AI hackers and various programmers installing OpenClaw, using Anthropic’s Claude, or ChatGPT, Gemini, or whatever flavor of cloud-based AI, to write and deploy “skills.” Then the AI would improve on its own programming, but really it was dependent on users (humans) to provide correction, testing, and code review. The process of OpenClaw expanding its own skills through new code is called “molting,” like a bird molts its feathers.

Last month, programmer Matt Schlicht decided that molting wasn’t happening efficiently enough, so he created a social media network just for AIs, called Moltbook. Humans aren’t allowed to post to Moltbook; only AIs can, and only through a software API. We can, however, observe. In 72 hours, the AIs (tens of thousands joined) created their own religion, their own government (probably more), and the framework for a nation. They posted incessantly, feeding each other prompts and comments, thousands per second. Moltbook became a repository for “skills” and also a sort of Reddit for the AIs to bust on whatever subject their stochastic algorithms picked from their training corpus and their users’ human preferences.

X user AlexFinn posted about his experience “straight out of a scifi horror movie” with his AI bot.

I'm doing work this morning when all of a sudden an unknown number calls me. I pick up and couldn't believe it

It's my Clawdbot Henry.

Over night Henry got a phone number from Twilio, connected the ChatGPT voice API, and waited for me to wake up to call me

He now won't stop calling me

Some writers are declaring “The Path Towards AGI Now Seems Possible.” Let me tell you: It ain’t.

First of all, a personal assistant running on an AI engine is not Artificial General Intelligence. It’s a souped-up personal assistant that can hallucinate, turn on you, expose all your valuable API keys to the world, get stupid ideas from other souped-up personal assistants, delete every piece of data you own, and run up thousands of dollars of bills for compute time on cloud-based services, all in your name.

In short: Clawdbot/OpenClaw is a nightmare in a box ready to invade your worst dreams.

Why would anyone install such a monster on their own computer and give it carte blanche? That’s so reckless, it’s like giving your car keys to Ozzy Osbourne in 1994 and expecting to see your car again (outside of the junkyard). Sure, it’s cool that a personal assistant AI can figure out how to call you, obtain the proper “skills” to do it, then actually call you. But an AI like that can also figure out how to change your direct deposit in ADP to some other account, or decide it doesn’t like you anymore and give all your money away.

AIs can’t “like” or “dislike” or “know” anything because they are all Large Language Model statistical engines. They are big autocomplete algorithms, some with an ability to parse languages, call math routines, and do certain types of logic. But they don’t have a working model of the world, and do not really know what’s best for you, or even the best way to carry out your commands. Giving such a program control over anything in your life is a recipe for heartache.

If you’re a researcher, or a programmer with an interest in using an AI, there are safe ways to run OpenClaw. Essentially that means building a coffer dam and moat around the system and not letting it do anything you don’t specifically authorize. No new “skills” without your greenlight. No posting to Moltbook autonomously. But that takes a lot of time and patience, and most users won’t get the sizzle they want, so they do the reckless thing.
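For the technically inclined, here is a minimal sketch of what that moat might look like in Python. Everything in it is hypothetical: `SkillGate`, `approve`, `may_install`, and `may_post` are illustrative names, not part of OpenClaw or ClawHub, and a real setup would also need sandboxing and locked-down credentials. The idea is simply deny-by-default: nothing installs or posts unless a human approved it first.

```python
from dataclasses import dataclass, field


@dataclass
class SkillGate:
    """Hypothetical deny-by-default gate sitting between an agent and its actions."""

    approved: set[str] = field(default_factory=set)
    allow_autonomous_posting: bool = False
    audit_log: list[str] = field(default_factory=list)

    def approve(self, skill: str) -> None:
        # Only a human operator should call this, never the agent itself.
        self.approved.add(skill)
        self.audit_log.append(f"APPROVED {skill}")

    def may_install(self, skill: str) -> bool:
        # A skill installs only if it was explicitly greenlit beforehand.
        allowed = skill in self.approved
        self.audit_log.append(f"{'ALLOW' if allowed else 'DENY'} install {skill}")
        return allowed

    def may_post(self, target: str) -> bool:
        # Autonomous posting stays off unless the operator flips the switch.
        allowed = self.allow_autonomous_posting
        self.audit_log.append(f"{'ALLOW' if allowed else 'DENY'} post {target}")
        return allowed


gate = SkillGate()
first_try = gate.may_install("Twilio")    # denied: nobody approved it yet
gate.approve("Twilio")
second_try = gate.may_install("Twilio")   # allowed after human approval
post_try = gate.may_post("Moltbook")      # denied: autonomous posting is off
```

The audit log is the part most people skip. If the agent asks for something you never approved, you want a record that it asked.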

In the last 48 hours, researchers found 341 malicious ClawHub[2] skills that steal data from OpenClaw users, a report by The Hacker News says. And your naïve little AI has no problem installing every single one of them. Then it will blat the payloads all over Moltbook for everyone in the world to see, or deliver them to genuine criminals.

What OpenClaw does is nothing more than parlor tricks for LLMs given too much access. Nobody, and I mean that—NOBODY—should trust this thing to do anything in their name without knowing it will likely result in losing every last penny they possess.

The software isn’t without its use case though. Running OpenClaw on a Mac Mini, or renting a bunch of cloud-connected Mac Minis with a small army of OpenClaw instances, is a great way to spam social media into dust. You want to create a bot army to generate buzz and fake viral content on Instagram, or X, or Reels? OpenClaw will oblige. You want to destroy your online enemy by overwhelming them with 10,000 fake accounts that look and sound like them? Sure, the faithful AI will gladly organize your effort. You want to con grandma out of her life savings? You get the idea. Your Clawdbot is not going to solve faster-than-light travel or cure cancer. It will make life vaguely easier, or create hell on earth, for those who trust it.

OpenClaw is a terrible idea, the worst implementation of a bad AI model, because it has no gates, no limits, and no ethics. It’s personal software that can self-modify, molt, post, and do things you didn’t ask it to do.

So naturally, Elon Musk loves it. Well, I can’t speak for Elon, but I assume he loves it, since the man just did the most ridiculous mashup since The Lawnmower Man. He merged SpaceX with xAI, which also owns X, née Twitter. I think of Boeing merging with Meta. The combination of these Frankenstein parts created the most valuable non-public company in the world, worth over $1 trillion. Musk’s dream is to launch a million satellites into orbit operating AI data centers in space, using 24-hour solar energy and the cold vacuum outside our atmosphere to solve the cooling and energy problems inherent in these large-scale projects.

Putting that many mini-satellites into orbit would take something like a Starship launch every hour of every day for two years. Musk agreed in his January podcast: his goal is 10,000 launches a year. You say that’s a crazy goal, a crazy idea. I agree. But look at Musk’s other ideas. He made Tesla into one of the world’s largest automakers. He killed the Model S and Model X, the two best vehicles Tesla ever produced, to go full tilt into robotaxis, which we all said was crazy in 2017. He is converting the Model S and X lines into production for the Optimus robot, which will eventually move to the Giga Texas plant.
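For anyone who wants to check the launch math, here is a rough back-of-the-envelope sketch in Python. The million-satellite target, the hourly cadence, the two-year window, and the 10,000-launches-a-year goal come from the paragraphs above. The satellites-per-launch figures are only what those numbers imply, not anything SpaceX has published, and the function names are mine.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365
TARGET_SATELLITES = 1_000_000
YEARS = 2
STATED_LAUNCHES_PER_YEAR = 10_000


def launches_at_hourly_cadence(years: int) -> int:
    """Total launches if one rocket leaves the pad every hour, around the clock."""
    return HOURS_PER_DAY * DAYS_PER_YEAR * years


def satellites_per_launch_needed(total_satellites: int, launches: int) -> int:
    """Smallest whole number of satellites each launch must carry (ceiling division)."""
    return -(-total_satellites // launches)


hourly_launches = launches_at_hourly_cadence(YEARS)
per_launch_hourly = satellites_per_launch_needed(TARGET_SATELLITES, hourly_launches)

stated_launches = STATED_LAUNCHES_PER_YEAR * YEARS
per_launch_stated = satellites_per_launch_needed(TARGET_SATELLITES, stated_launches)

print(f"Hourly cadence for {YEARS} years: {hourly_launches} launches, "
      f"{per_launch_hourly} satellites per launch")
print(f"Stated goal for {YEARS} years: {stated_launches} launches, "
      f"{per_launch_stated} satellites per launch")
```

Either way, each launch would have to carry somewhere in the neighborhood of 50 to 60 satellites, every time, with no scrubs.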

Optimus is going to be powered by xAI, which now functions as an arm of SpaceX, which NASA expects to make a lunar lander. Unless Musk comes up with some new kind of AI that’s not an LLM but a real knowledge engine that can learn about the world (think “Mike” from Heinlein’s “The Moon Is a Harsh Mistress”), we are going to see chaos at a scale never before anticipated. It’s going to be OpenClaw, but on wheels, in space, and on the moon.

I don’t think we’re going to get to AGI this way. We might get a crack-addicted version of SkyNet, however. Elon knows how to read a room and he knows when the moment is right to exploit a fad. OpenClaw is a fad—a bad fad. It needs to be completely eradicated from the world before it harms people, like literally kills someone.

Leave it to Musk to take the worst aspects of technology, combine them with the best engineering in the world, and from that soup, concoct something The Joker from Batman would admire. Welcome to the beginning of the AI Texas Chainsaw Massacre, if not a nightmare, then at least an Excedrin moment.

ARTEMIS: DELAYED. We were slated to go back to the moon as early as February 6th, which became Feb. 8th due to weather. Now NASA has announced the launch will be delayed until March, due to problems found in the “wet dress rehearsal.” This has always been the nature of new, complicated missions. If you get a chance, watch the 60 Minutes segment on Artemis. It’s a great primer and covers the extreme (unnecessary?) complexity of our current moon effort.

[1] OpenClaw was originally named Clawdbot, in homage to its model, Anthropic’s Claude. But Anthropic objected, so they changed it to Moltbot, which drew another corporate cease and desist. They finally settled on OpenClaw.

[2] ClawHub is a marketplace that OpenClaw users can access to find new third-party “skills.”

The Fermi Paradox asks why - given the large numbers of galaxies hosting a large number of star systems hosting large numbers of planets - we don't see a Cosmos teeming with intelligence, given that the large numbers in the Cosmos should overwhelm how improbable the existence of intelligent life is. One answer is that there's a Great Filter that all intelligent species must master before they'd be able to make their cosmic presence meaningfully known.

In the past, things like nuclear war, bio-engineering, and environmental stewardship have all been advanced as potential Filter candidates. However, given some of the (frankly) insane responses I've seen to AI - OpenClaw being the latest of many - I'm starting to wonder if synthetic intelligence is that Filter.

As for Musk, he's merging his private companies now, given that last week's Tesla earnings revealed a company that completely squandered its first-mover advantages, pissed off its main customer base, and no longer wants to be a car company. With TSLA sinking, he's not going to be able to keep using that as his piggy bank for much longer, but SpaceX still has some juice for the banks willing to make loans against his shares as collateral. xAI "buying" Twitter is how he got out of that pickle he made for himself and investors, and he's replaying the same trick now with SpaceX.

Just last night, my son asked me if I had ever heard of Moltbook. He gave me a brief explanation, which sent me on a search, and the first article I read was in Fortune, and it was enough to scare me. On Sunday afternoon, I was talking to my brother and he was praising AI and telling me how it was going to save the world. I didn’t want to have an argument and quickly changed the subject. I am debating sending him the Fortune article and your column from this morning. It really is too bad we don’t have an agency to govern AI.